Searching the Help

To search for information in the Help, type a word or phrase in the Search box. When you enter a group of words, OR is inferred. You can use Boolean operators to refine your search.

Results returned are case insensitive. However, results ranking takes case into account and assigns higher scores to case matches. Therefore, a search for "cats" followed by a search for "Cats" would return the same number of Help topics, but the order in which the topics are listed would be different.

| Search for | Example | Results |

|---|---|---|

| A single word | cat

|

Topics that contain the word "cat". You will also find its grammatical variations, such as "cats". |

|

A phrase. You can specify that the search results contain a specific phrase. |

"cat food" (quotation marks) |

Topics that contain the literal phrase "cat food" and all its grammatical variations. Without the quotation marks, the query is equivalent to specifying an OR operator, which finds topics with one of the individual words instead of the phrase. |

| Search for | Operator | Example |

|---|---|---|

|

Two or more words in the same topic |

|

|

| Either word in a topic |

|

|

| Topics that do not contain a specific word or phrase |

|

|

| Topics that contain one string and do not contain another | ^ (caret) |

cat ^ mouse

|

| A combination of search types | ( ) parentheses |

|

Understand the IDE

IDE Terms

-

IDE: Integrated Development Environment. The Eclipse workspace where you can create or extend content using modeling tools in the relevant designers.

Note The path to the workspace should not contain blank spaces. For example: C:/workspaces/ide_workspace/.

- KPI: Key Performance Indicator. KPIs reflect how well the organization is doing in areas that most impact financial measures valued by shareholders, such as profitability and revenues.

- API: Application Programming Interface. A specification intended to be used as an interface by software components to communicate with each other.

- ETL: Extract, Transform, Load. An end-to-end transfer of external source data through several staging layers and into the target layer of the data warehouse. The data warehouse uses the ETL process to extract, consolidate, and transform the source data into a meaningful target model that populates relevant business analytics.

- UDM: Unified Data Model. Provides a bridge between the user and the data sources.

- DWH: Data Warehouse. The data warehouse is an end-to-end solution for extracting source data and consolidating disparate source data models into a consolidated data model. The data organized to reflect this model resides in target tables that become the source for ITBA.

- ITBA: IT Business Analytics. An application that enables executives to continuously improve their business by measuring what happened and what is happening, analyzing that information, and planning new strategies using the gathered information.

-

Data Collection Service (DCS).

DCS enables you to extract data from a specific data source according to a set of instructions. It consists of a plug-able extractor framework for each data source. The extractor gathers data according to the specific instructions it receives, placing it into a relevant .txt flat file. If you want to develop your own extractor for use with extended content, see ..

The supported extractors for the OOTB Content Packs are as follows:

Content Pack Extractor Type PPM(Oracle) DB SM(MSSQL, Oracle, DB2) DB ALM REST API CSA REST API AWS SDK AWSCW SDK AM DB SA DB VPV "REST API" AZURE "REST API" - Entity. An entity is an object of interest included in the data model.

- Designer. A designer in the IDE is the model you create in order to perform content extension and definition. It is a collection of certain entities that may share some relevancy in common. The IDE contains the Architect Target Designer and the Engineer Stream Designer.

-

Content Packs. A content pack is a set of entities from a certain type. There are three types of content packs:

- Source. Contains all of the artifacts needed to connect to the relevant data source and pull data from that data source.

- Integration. Integrates the information from the source, using the DWH schema and loading process in order for the data to be brought to the Target tables. Takes the schema and entities of the source data and transforms it into usable data.

- Target. Defines the content of the Data Warehouse as well as the look of the target tables according to the Unified Data Model (UDM).

IDE Audience

This document assumes basic data warehouse modeling knowledge, understanding of the ETL concepts, good familiarity with databases including the Vertica database.

Requirements for DWH Architects

-

Understanding of Business Intelligence tools, data warehouse modeling and database design principles.

-

ITBA DWH Target schema.

Requirements for DWH ETL Engineers

-

Understanding ETL principles.

-

Familiarity with the source schema.

Requirements for DWH Integration Engineers

-

Java programming skills

Prerequisite Knowledge for Performing Content Development Tasks

-

Unified Data Model (UDM).

-

Target Model: The existing supported model of the DWH

-

Basic architecture of the data warehouse and the advantages of the templates.

-

Basic familiarity with the Integrated Development Environment (IDE).

-

Vertica and data modeling.

IDE End-to-End Extension Scenario

This section provides an end to end scenario.

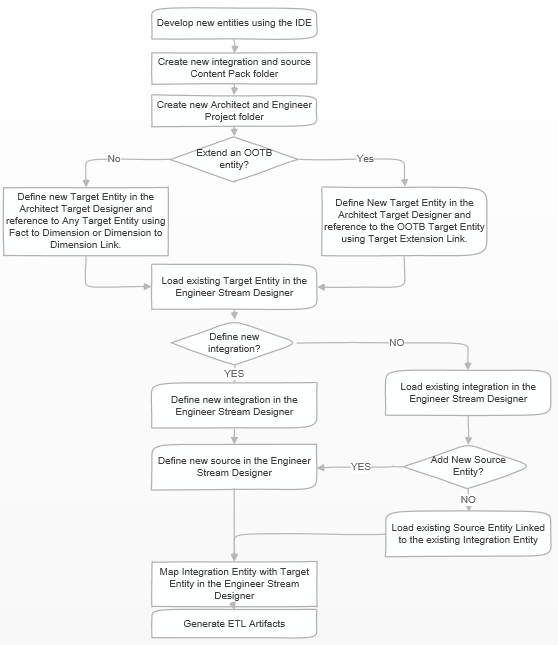

The following illustrates the typical flow, tasks, and procedures in the IDE process. The tasks correspond to the relevant tasks in the Designers.

To extend the existing target entity LOCATION:

-

Create all the required project folders for the new Content Pack.

-

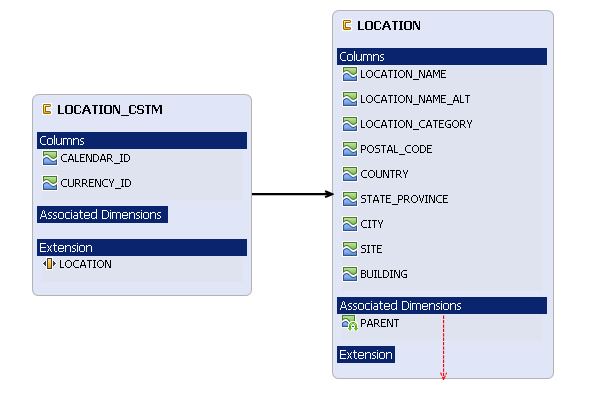

Create the extension entity in the Architect Designer, and then link the extension entity to the OOTB entity using the Target Extension link. For details, see Extend a Target Entity.

In this scenario, add two columns CALENDAR_ID and CURRENCY_ID to the OOTB entity LOCATION.

-

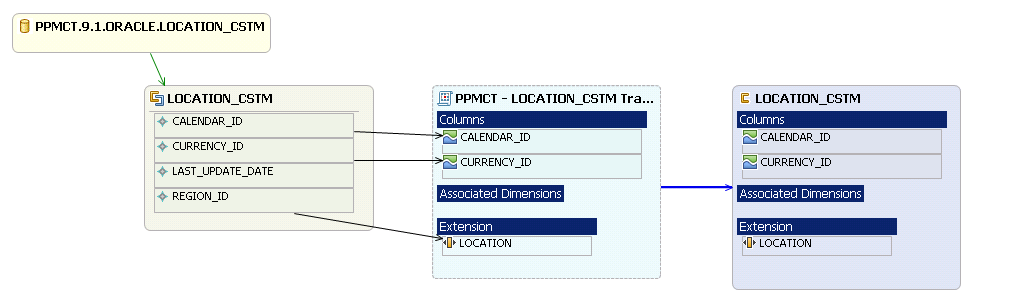

Create a new integration and source entity in the Engineer Designer, then map the columns from integration to target entity. For details, see Diagram Editor - Engineer Stream Designer Tasks.

-

Generate the ETL artifacts.

-

Configure the cross-Content Pack configuration file in the $HPBA_HOME/ContentPacks/PPMCT/CONF/dataSourceParamsConfig.xml file.

For details, see Consolidate Entities - Cross-CP Lookup.

-

In the Context Designer, you can link the new target entity with an OOTB target entity, or you can link them in the customized views. Then the new target entity and the OOTB target entity can be used by the KPI formula.

For details, see Semantic Layer - Context Designer.

Content packs contain all the artifacts needed to connect to the relevant data source and gather data from that data source. The mapping between the raw data from the data source and the target database in the Data Warehouse is included in the ETL.

Content Pack contents

Content Pack contents

Content Packs include:

-

The definition of the data model used for the connection.

-

The type of data gathered from the data source.

-

The ETL definitions.

-

The definition files.

-

The configuration files.

-

The scripts that help build and define the Data Warehouse.

Content Pack Types

Content Pack Types

-

Target Content Pack. Responsible for the transfer of data from staging to the target database. The Target CP defines the content of the Data Warehouse as well as the look of the target tables.

-

Integrated Content Pack (iCP). The iCPs are responsible for bringing the data from the specific data sources and transforming it into the staging level. From there, the Target CP transfers the data to the target. The iCPs integrate the information from the source, using the DWH schema and loading process in order for the data to be brought to the target tables.

Content Pack directories

Content Pack directories

Content Packs contain the following directories:

-

STREAM. Contains files that define the ETL stream at the entity level, and the general flow of steps of the ETL process. The files list the order of the steps as well as the link between the parent and child steps. These files are necessary if you have to add a step to the ETL process.

-

Data Model. The Data Model in the Core Content Pack contains the xml files that define the target schema. The Data Model in the Integration Content Pack contains the xml files that define the staging and extraction schema.

-

ETL. Contains the jobs and workflows of the ETL and defines how data goes through the processes.

-

cp_manifest.properties. The manifest file provides additional information to the Content Pack to allow the system to perform sets of validations.

-

dw_app.properties.

Data Model Types

Data Model Types

Data models describe the source, extraction, consolidation, and target components for a single entity:

- Target Entity. Fact or Dimension: Entity name and column names defined according to the Star-Schema architecture.

- Source Entity. The actual representation of an entity extracted from the source. Generated automatically according to the integration entity design.

- Integration Entity. Entity name, column names, and data types defined according to source table design.

- Column Mapping. Integration to Target column mapping.

Logical Data Models

Logical Data Models

DWH supports the following types of logical data models:

-

Target Data Models:

-

Target Model – Dimension or Fact. The target data models describe the format of the data that produces business intelligence analytics. The instantiated data models are dimension, fact, and hierarchy tables.

-

-

iCP Data Models:

-

Extraction Model. ETL processes obtain the data in native format and stages it in intermediate tables that conform to the extraction data model for that entity. Extraction also validates the data to ensure that it is usable.

-

Source Model. The source entity models represent the design of DII (Data Integration Interface).

-

Physical Data Models

Physical Data Models

DWH logical Data Models generate multiple physical tables that support the ETL processing logic.

-

Source entity models. The source entity models are the data produced by an external application. Used for a specific version of the product.

-

Extraction models. ETL processes obtain the data in native format and stage it in intermediate tables that conform to the extraction data model for that entity. Extraction also validates the data to ensure that it is usable.

-

Consolidation models. Consolidation models transform the data further by merging extracted data from diverse sources into meaningful data required by applications.

-

Target models. The target models describe the final version of the data that adheres to the design required by applications.

Data models describe the source and extraction format so that the data warehouse can consume this data and integrate it into a common view that you can use to populate comprehensive business intelligence reports and dashboards.

Each table contains both designed columns and static columns, also known as metadata columns, so that the administrator can have the visibility of ETL processing over the entity’s data.

Data Warehouse Internal Fields

Data Warehouse Internal Fields

The following are the internal Data Warehouse fields, in addition to those in the IDE, that are added to the model.

| Field Name Prefixes | Description |

|---|---|

| MD_BATCH_ID |

ETL stream ID displayed on all Physical Models. |

| MD_PROCESS_ID | ETL Process ID running the specific job. |

| MD_DURABLE_KEY |

Entity’s unique identifier populated with MD_QUALIFIER which should be considered as the identifier to consolidate records. Displayed only in Consolidation Physical Models |

| MD_ENTERPRISE_KEY |

Entity’s unique identifier in the DWH system. Displayed only in Target Physical Models |

| MD_BUSINESS_KEY |

Entity’s unique key as it comes from the source systems. Displayed only in Target and Extraction Physical Models |

| MD_TRANSLASTIND |

Indicator whether the line is the last transaction of a specific MD_ENTERPRISE_KEY. Displayed only in Target Physical Models |

| MD_ACTIVESTATUSIND |

Indicator whether the line is active (simulates delete operation). Displayed only in Target Physical Models |

| MD_TRANSENDDATE |

Transaction end date. Displayed only in Target Physical Models |

| MD_DELETEDDATE |

Delete date (date record was marked as inactive). Displayed only in Target Physical Models |

| MD_CREATEDDATE |

Date record was created. Displayed only in Target Physical Models |

| MD_LASTMODDATE |

Date record was last modified and updated. Displayed only in Target Physical Models |

| MD_FLAG |

Flag that indicates whether row is new, updated, or requires a delete. Displayed only in Extractions Physical Models |

| MD_PF_FLAG |

Flag that indicates whether row is pre-filled or not. Displayed only in Extractions Physical Models |

| MD_CP_ID | Source instance ID |

We welcome your comments!

To open the configured email client on this computer, open an email window.

Otherwise, copy the information below to a web mail client, and send this email to SW-Doc@hpe.com.

Help Topic ID:

Product:

Topic Title:

Feedback: